A blog with news and curiosities on genomics, with a particular interest in topics related to Next Generation Sequencing, Personal Genomics and Bioinformatics. We work at the University of Brescia (Italy) and are new to the field, but we have a lot of energy to share.

Monday, 30 January 2012

NHGRI presents Current Topics in Genome Analysis 2012

Friday, 27 January 2012

What is a ... "MitoExome"?

The latest issue of Science Translational Medicine carries an article describing the targeted sequencing of the mitochondrial DNA and the exons of 1037 nuclear genes encoding mitochondrial proteins in 42 unrelated infants with clinical and biochemical evidence of mitochondrial oxidative phosphorylation disease. Ten patients had mutations in genes previously linked to disease, while 13 had mutations in nuclear genes not previously linked to disease. The pathogenicity of two such genes, NDUFB3 and AGK, was supported by complementation studies and evidence from multiple patients. For the other half of the patients studied, the genetic mutations causing mitochondrial disorders remain unknown.

Thursday, 26 January 2012

"If you can't beat them, join them!" (or... buy them): Roche's hostile bid for Illumina.

"If you can't beat them, join them!" is a proverb often used in politics and war. In this case we use it for a financial strategy chosen by the "commanders" of Roche. Some rumors started to appear in the past weeks, but now Roche comes out with an official bid of (please take a sit) $5.7 billions to acquire Illumina, more precisesly $44.50 per share in cash, an 18 percent premium over Illumina's closing share price of $37.69 yesterday.

Apparently Roche first tried to negotiate quietly with Illumina to reach a deal, but Illumina declined any kind of offer. After numerous attempts, Franz Humer, Roche’s chairman, released a public letter to Illumina chief executive and chairman Jay Flatley bemoaning “the lack of any substantive progress in our efforts to negotiate a business combination between Illumina and Roche”; a January 18 letter confirmed a lack of interest by Illumina’s board.
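For readers who like to check the numbers, the premium quoted in the bid can be recomputed from the two share prices given in the post. This is only a small sketch: the function and variable names are ours, chosen for illustration, and the only inputs are the offer price and the closing price reported above.

```python
# Figures quoted in the post; names below are illustrative, not from any source.
offer_per_share = 44.50   # Roche's cash offer per Illumina share (USD)
closing_price = 37.69     # Illumina's closing share price the day before (USD)


def premium_percent(offer: float, reference: float) -> float:
    """Return the premium of `offer` over `reference`, as a percentage."""
    return (offer / reference - 1.0) * 100.0


premium = premium_percent(offer_per_share, closing_price)
print(f"Premium over the closing price: {premium:.1f}%")
```

Rounded to a whole number, the result agrees with the 18 percent premium reported in the announcement.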

Tuesday, 24 January 2012

More than 50 million unique variants in dbSNP

Today the MassGenomics blog reports an EXCELLENT survey of the current state of dbSNP written by Dan Koboldt.

Friday, 20 January 2012

VarSifter: a useful software to manage NGS data

Thursday, 19 January 2012

60 genomes in the clouds....

The diffusion of NGS technology has made clear that no one has the ability to analyze in depth all the data produced in large scale WGS or WES projects.

Wednesday, 18 January 2012

BarraCUDA

BarraCUDA is a GPU-accelerated short read DNA sequence alignment software based on Burrows-Wheeler Aligner (BWA).

Flash Report: most impressive NGS papers of 2011

Kevin Davies, founding editor of Nature Genetics and Bio-IT World, indicates in the NGS Leaders Blog some of the top NGS papers published in 2011.

Saturday, 14 January 2012

Freely "Explore" the Pediatric Cancer Genome Project data

St. Jude Children’s Research Hospital announced in a press release the launch of “Explore”, a freely available website for published research results from the St. Jude Children’s Research Hospital – Washington University Pediatric Cancer Genome Project (PCGP). The PCGP is the largest effort to date aimed at sequencing the entire genomes of both normal and cancer cells from pediatric cancer patients, comparing differences in the DNA to identify genetic mistakes that lead to childhood cancers (see my previous post).

Pediatric Cancer Genome Project

Two years ago St. Jude Children's Research Hospital and Washington University School of Medicine in St. Louis announced a $65-million, three-year joint effort to identify the genetic changes that give rise to some of the world's deadliest childhood cancers. The aim was to decode the genomes of more than 600 childhood cancer patients. No one had sequenced a complete pediatric cancer genome prior to the PCGP, which has sequenced more than 250 sets to date.

In the other study (A novel retinoblastoma therapy from genomic and epigenetic analyses) investigators sequenced the tumors of four young patients with retinoblastoma, a rare childhood tumor of the retina of the eye. The finding also led investigators to a new treatment target and possible therapy.

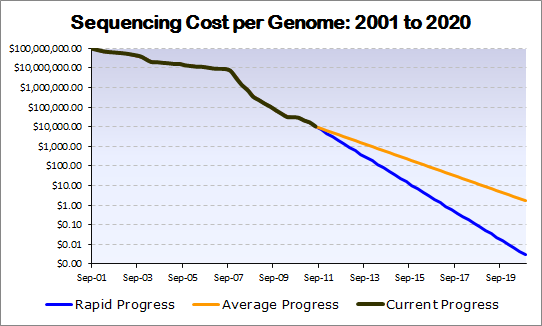

A "negative cost" for the genome in the future?

Friday, 13 January 2012

NGS: a ramp up for Stem Cells?

NGS techniques are rapidly changing many aspects of the research world. Their potential applications are many, and probably not all of them are known yet. In the new issue of Nature Biotechnology (January 2012), N.D. DeWitt et al. describe one of the most challenging and interesting contributions that NGS techniques can make to biomedical research: an engagement in the stem cell field. “There is an urgent need”, DeWitt says, “to ramp up the efforts to establish stem cell as a leading model system for understanding human biology and disease states.” Several analyses of human iPS cells (hiPSCs) and human ES cells (hESCs) detected structural and sequence variations under some culture conditions. It is not yet clear whether these variations are present in the original cell (for hiPSCs) or whether they are due to the process of deriving the cells or even to the culture conditions. What is clear is the need to understand what causes the variations and to determine what kind of changes in cellular behaviour they lead to.

Thursday, 12 January 2012

AmpliSeq Inherited Disease Gene Panel

A $1 genome by 2017?

Flash Report: Cancer Genome and Exome Sequencing in 2011

Wednesday, 11 January 2012

An exciting new year

The NASDAQ response: Illumina vs LIFE

Flash Report: another "Genome in a Day" DNA sequencer announced yesterday

It comes as no surprise that yesterday Illumina also issued a press release, introducing the HiSeq 2500, an evolution of their HiSeq 2000 platform that will enable researchers and clinicians to sequence a “Genome in a Day”.

Our 2 cents: "the Chip is (not) the machine"

Tuesday, 10 January 2012

How the little ones can do it well in NGS

Breaking News: "Ion Proton", the evolution of the Ion Torrent PGM

This is big news in the NGS market. Today Life Technologies announced the Ion Proton, an evolution of the Ion Torrent PGM designed to sequence the entire human genome in a day for $1,000.

Monday, 9 January 2012

Flash Report: Survey of 2011 NGS market

Jeffrey M. Perkel discusses in a Biocompare editorial article the events that shaped the next-gen sequencing market in 2011.

Flash Report: 3000 human genomes delivered in 2011 by Complete Genomics

Complete Genomics today announced that the company delivered approximately 3,000 genomes to its customers in 2011 and is entering 2012 with contracts for approximately 5,800 genomes.

Flash Report: Life Technologies press release on Ion Torrent new products and protocols

Today Life Technologies Corporation announced several new products and protocols to improve workflows for Ion Torrent DNA sequencing applications. You can read the press release here.

Flash Report: six months of Ion Torrent

Flash Report: paired ends on the Ion Torrent platform

Ion Torrent released a set of paired-end datasets over the Christmas holiday. The interesting news is discussed in this article on Omics! Omics! It is quite encouraging to read that they are apparently able to reduce indel errors by about 5-fold on an E. coli DH10B dataset using this procedure.

Sunday, 8 January 2012

Flash Report: a soft genome

Here we go with a new category of posts on this blog: Flash Reports. Whenever we want to share an interesting news article with our readers but we do not have time to write a post for the NGS blog, we will create a "Flash Report" with a brief description and the link to the original article.

BGI ... GPU ... NGS...???

Don't feel guilty for playing games